Learning-Based Ultra-Low-Power Optimization for VLSI Architectures

DOI:

https://doi.org/10.31838/JVCS/07.01.15

Keywords:

SoC Power Optimization, Deep Reinforcement Learning (DRL), Low power architecture design

Abstract

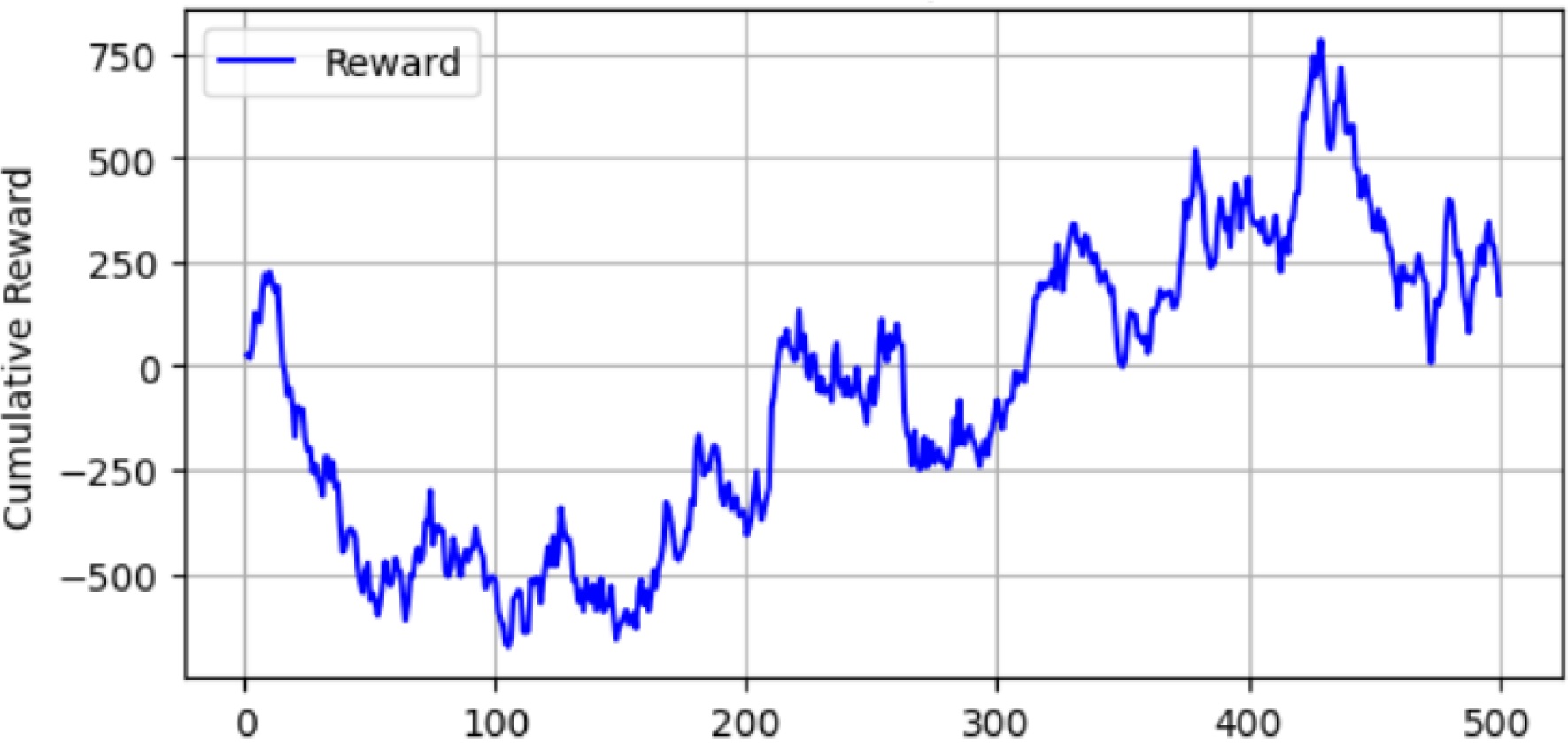

The growing demand for ultra-low-power Very-Large-Scale Integration (VLSI) architectures in edge computing, IoT devices, and wearable electronics is difficult to meet because the design environments are complex and dynamic. These systems must manage a wide range of parameters, including voltage scaling, clock gating, power gating, transistor-level optimizations, memory subsystem configurations, and interconnect designs. The optimization process is further complicated by issues such as process variation, aging effects, thermal constraints, and workload variability. Traditional optimization methods often struggle to keep up with these evolving demands, which highlights the need for new strategies. A major challenge is the requirement to adjust system configurations in real time while balancing multiple, often conflicting objectives. Effectively navigating this complex design space calls for a robust, scalable, and adaptive optimization methodology. This work addresses these challenges through a structured framework for ultra-low-power VLSI design. By treating the VLSI design process as a sequential decision-making problem, Deep Reinforcement Learning (DRL) is used to optimize key design parameters, including voltage levels, clock frequencies, core usage, workload scheduling, and memory configurations. Experimental results show that the proposed approach reduces power by over 22% compared with conventional Dynamic Voltage and Frequency Scaling (DVFS) methods.
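
To make the sequential decision-making view concrete, the following is a minimal sketch and not the framework evaluated in this work. It uses tabular Q-learning as a simple stand-in for DRL, and every number in it is an assumption chosen for illustration: the operating points, the workload levels, the normalized capacitance, and the deadline-miss penalty. The only borrowed fact is the standard CMOS dynamic power relation P = C · V² · f. The state is the current workload level, the action is a voltage/frequency pair, and the reward is negative power plus a penalty when the chosen frequency cannot serve the workload.

```python
import random

# Hypothetical operating points (voltage in V, frequency in GHz); not from the paper.
OPERATING_POINTS = [(0.6, 0.5), (0.8, 1.0), (1.0, 1.5)]
# Hypothetical workload levels, expressed as the frequency (GHz) needed to keep up.
WORKLOADS = [0.4, 0.9, 1.4]
CAPACITANCE = 1.0   # normalized switched capacitance (assumed)
MISS_PENALTY = 5.0  # assumed cost of running too slowly for the workload


def dynamic_power(voltage, frequency):
    """Standard CMOS dynamic power model: P = C * V^2 * f."""
    return CAPACITANCE * voltage ** 2 * frequency


def reward(workload, action):
    """Negative power, minus a penalty if the frequency is below the workload."""
    voltage, frequency = OPERATING_POINTS[action]
    penalty = MISS_PENALTY if frequency < workload else 0.0
    return -(dynamic_power(voltage, frequency) + penalty)


def train(episodes=2000, steps=20, alpha=0.05, gamma=0.5, epsilon=0.1, seed=0):
    """Tabular Q-learning over (workload state, operating-point action)."""
    rng = random.Random(seed)
    n_states, n_actions = len(WORKLOADS), len(OPERATING_POINTS)
    q = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(episodes):
        state = rng.randrange(n_states)
        for _ in range(steps):
            # Epsilon-greedy action selection.
            if rng.random() < epsilon:
                action = rng.randrange(n_actions)
            else:
                action = max(range(n_actions), key=lambda a: q[state][a])
            r = reward(WORKLOADS[state], action)
            # Assumption: the next workload level is drawn uniformly at random.
            next_state = rng.randrange(n_states)
            target = r + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Index of the highest-valued operating point for each workload state."""
    return [max(range(len(row)), key=lambda a: row[a]) for row in q]


if __name__ == "__main__":
    policy = greedy_policy(train())
    for workload, action in zip(WORKLOADS, policy):
        print(f"workload {workload} GHz -> operating point {OPERATING_POINTS[action]}")
```

In this toy setting the learned policy should settle on the lowest-power operating point that still meets each workload level. A DRL agent would replace the Q-table with a neural network so that larger state spaces (for example temperature, core usage, and memory configuration) become tractable.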