Energy-Aware Physical Synthesis of Deep Neural Networks for Edge-AI Applications in Robotics and VLSI Systems

DOI: https://doi.org/10.31838/JVCS/07.01.23

Keywords: Edge AI, Energy-Aware Physical Synthesis, VLSI/SoC, Quantization, Robotics

Abstract

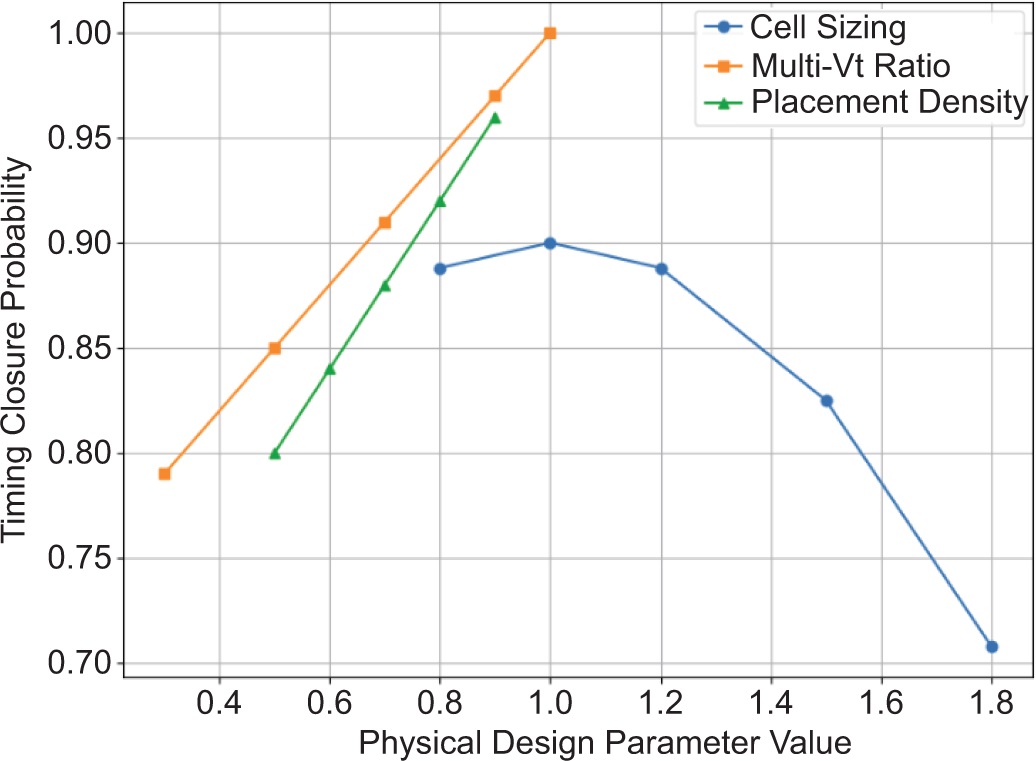

Energy-aware physical synthesis is essential for deploying deep neural networks (DNNs) in edge-AI applications for robotics and VLSI systems, where stringent power, area, and latency constraints prevail. Beyond algorithm- and software-level optimizations such as network compression and compiler techniques, physical effects during VLSI implementation, including clock tree synthesis (CTS), routing congestion, IR-drop, cell sizing, and placement, significantly influence energy consumption and timing closure. This work presents a unified framework that co-optimizes DNN architectural features (quantization, sparsity, and operator tiling) with physical design choices such as multi-Vt selection, cell sizing, placement strategy, activity-driven buffering, and CTS. The methodology formulates a multi-objective optimization that minimizes energy at iso-throughput while meeting timing and area constraints, using analytic models and sign-off-calibrated power estimates. A co-design loop iteratively reshapes the network and refines physical synthesis, guided by sensitivity to switching-activity factors and critical-path slack. The framework is validated with prototype RTL for representative CNN and transformer blocks, implemented on open PDKs and evaluated on FPGA/SoC testbeds for mobile robotics scenarios. Results show 28–41% lower energy per inference at constant accuracy and throughput, 12–18% lower leakage with multi-Vt assignment and sizing, and a 1.6× improvement in the probability of closing worst negative slack (WNS) across 500–800 MHz operation. Ablation studies isolate the impact of quantization-aware placement and activity-weighted CTS. The framework integrates with standard EDA flows and IEEE design rules, enabling automated hardware-software co-optimization for practical edge-AI deployments.
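The shape of the optimization described in the abstract can be illustrated with a small sketch. It is not the authors' framework: it replaces the iterative co-design loop with a brute-force search over a tiny grid of hypothetical knobs (bit-width, sparsity, low-Vt fraction) and uses made-up analytic models for energy, critical-path delay, and area. Only the structure follows the abstract: minimize energy per inference at a fixed time per inference (iso-throughput), subject to non-negative slack, an area budget, and an assumed minimum bit-width standing in for the constant-accuracy requirement. Dynamic energy uses the standard CMOS switching model E = αCV² per operation. Every constant, the leakage and delay models, and the names `DesignPoint`, `energy_j`, `critical_path_ns`, `area_mm2`, `best_point`, and `GRID` are hypothetical.

```python
"""Hypothetical sketch: minimum-energy design point at iso-throughput.

Every knob, constant, and model here is an illustrative placeholder,
not a value or method taken from the paper.
"""
from dataclasses import dataclass
from itertools import product

F_CLK_HZ = 800e6            # target clock (top of the 500-800 MHz range)
T_CLK_NS = 1e9 / F_CLK_HZ   # clock period in ns (1.25 ns)
VDD = 0.8                   # supply voltage, V (assumed)
ALPHA = 0.15                # average switching-activity factor (assumed)
C_PER_BIT_F = 50e-15        # switched capacitance per MAC bit, F (assumed)
MACS = 1_000_000            # MACs per inference (assumed)
T_INF_S = 1e-3              # fixed time per inference => iso-throughput (assumed)
AREA_MAX_MM2 = 1.0          # area budget, mm^2 (assumed)
MIN_BITS = 8                # stand-in for "constant accuracy" (assumed)


@dataclass(frozen=True)
class DesignPoint:
    bits: int         # quantization bit-width of the datapath
    sparsity: float   # fraction of MACs skipped by pruning
    lvt_frac: float   # fraction of cells assigned to low-Vt


def energy_j(p: DesignPoint) -> float:
    """Energy per inference: dynamic alpha*C*V^2 per active MAC plus leakage."""
    active_macs = MACS * (1.0 - p.sparsity)
    e_dyn = active_macs * ALPHA * (C_PER_BIT_F * p.bits) * VDD**2
    # Hypothetical leakage: 0.1 mW for an 8-bit all-high-Vt core;
    # low-Vt cells are assumed to leak 5x more than high-Vt cells.
    p_leak_w = 1e-4 * (p.bits / 8) * (1.0 + 4.0 * p.lvt_frac)
    return e_dyn + p_leak_w * T_INF_S


def critical_path_ns(p: DesignPoint) -> float:
    """Hypothetical delay: wider datapaths are slower, low-Vt cells are faster."""
    return (0.8 + 0.1 * p.bits) * (1.0 - 0.3 * p.lvt_frac)


def area_mm2(p: DesignPoint) -> float:
    """Hypothetical area model, linear in bit-width."""
    return 0.1 * p.bits


def best_point(candidates):
    """Minimum-energy candidate that meets accuracy, timing, and area; else None."""
    feasible = [
        p for p in candidates
        if p.bits >= MIN_BITS
        and T_CLK_NS - critical_path_ns(p) >= 0.0   # non-negative slack
        and area_mm2(p) <= AREA_MAX_MM2
    ]
    return min(feasible, key=energy_j) if feasible else None


# Small grid of network (bits, sparsity) and physical (low-Vt fraction) knobs.
GRID = [
    DesignPoint(b, s, v)
    for b, s, v in product((4, 8, 16), (0.0, 0.5), (0.0, 0.25, 0.5, 0.75, 1.0))
]

if __name__ == "__main__":
    print(best_point(GRID))  # DesignPoint(bits=8, sparsity=0.5, lvt_frac=0.75)
```

With these placeholder numbers, 4-bit points fail the assumed accuracy floor, 16-bit points miss both the 1.25 ns clock period and the area budget, and 8-bit points close timing only with a low-Vt fraction of 0.75 or more. The search therefore keeps the smallest low-Vt fraction that still meets timing, because extra low-Vt cells only add leakage, which mirrors the slack-versus-leakage trade-off the abstract attributes to multi-Vt selection.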